First, our method:

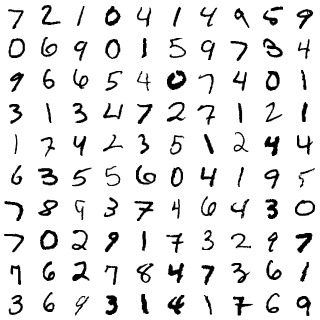

    -- create 100 mnist test images (using the test-csv):
    $ ./mnist-data-to-images.py 100

    -- filter the sw-file from yesterday down to the layer-1 results (that is all we need):
    $ cd sw-examples/
    $ grep "^layer-1 " mnist-100--save-average-categorize--0_5.sw > mnist-100--layer-1--0_5.sw

    -- phi-transform the test images (with a run-time of 62 minutes):
    $ cd ..
    $ ./phi-transform.py 10 work-on-handwritten-digits/test-images/*.bmp

    -- make pretty pictures:
    $ cd work-on-handwritten-digits/
    $ ls -1tr test-images/*.bmp > image-list.txt
    $ montage -geometry +2+2 @image-list.txt mnist-test-100.jpg
    $ ls -1tr phi-images/* > image-list.txt
    $ montage -geometry +2+2 @image-list.txt mnist-test-100--phi-transform-mnist-100-0_5.jpg

And now the results. First the 100 test images:

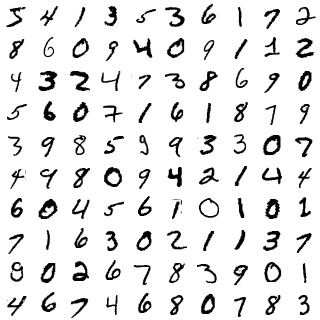

Now, after the phi-transform:

So the images come out sort of normalized, but I'm not sure it is a useful improvement. This phi-transform only used the average-categorize-image-ngrams from 100 training images. What if we ramp that up to 1000? And of course, there are two other variables we don't know the best values for: the image-ngram size and the average-categorize threshold. Currently we are using 10 and 0.5 respectively. Also, I don't know if the phi-transform is better or worse than just doing a simple Gaussian blur (see my edge-enhance code for that). And of course, there is always the possibility that average-categorize won't help us at all, but I suspect it will.
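For the Gaussian blur comparison, here is a minimal sketch of what such a baseline could look like. It is not the edge-enhance code mentioned above. The function names (`gaussian_kernel`, `convolve_rows`, `gaussian_blur`) are made up for illustration, and it assumes the image has already been loaded as a list of rows of grayscale pixel values. Loading and saving the `.bmp` files is left out.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Return a normalized 1-D Gaussian kernel as a list of floats."""
    if radius is None:
        # Assumption: cut the kernel off at roughly 3 standard deviations.
        radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_rows(image, kernel):
    """Convolve every row with the kernel, clamping indices at the edges."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                # Pixels past the border reuse the nearest edge pixel.
                xx = min(max(x + k - radius, 0), width - 1)
                acc += w * row[xx]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(image):
    """Swap rows and columns of a list-of-lists image."""
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, sigma=1.0):
    """Separable Gaussian blur: one pass along rows, one along columns."""
    kernel = gaussian_kernel(sigma)
    blurred = convolve_rows(image, kernel)
    return transpose(convolve_rows(transpose(blurred), kernel))
```

Calling `gaussian_blur(pixels, sigma=1.0)` on each 28x28 test image would give a blurred set to montage next to the phi-transformed one. The `sigma` value is a free parameter here, in the same way the ngram size and threshold are for the phi-transform.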