So today, I finally implemented edge-enhance in Python. Interestingly enough, I had a BKO version many months back, but it took over 20 hours per image!! So I did a couple of test images (Lenna and a child), and then never used it again. The details and results of that are here. Thankfully the new version only takes seconds to minutes, depending on the size of the image and how large you set the enhance-factor.

The general algo for my edge-enhance code is:

1) Gaussian smooth the image k times, where k is our enhance-factor

2) subtract the original image from that

3) then massage the resulting pixels a little

where the 1D version of the Gaussian smooth is (which rapidly converges to a nice bell curve after a few iterations):

f[k] -> f[k-1]/4 + f[k]/2 + f[k+1]/4

and the 2D version is:

    def smooth_pixel(M,w,h):
        r = (M[h-1][w-1]/16 + M[h][w-1]/16 + M[h+1][w-1]/16
             + M[h-1][w]/16 + M[h][w]/2 + M[h+1][w]/16
             + M[h-1][w+1]/16 + M[h][w+1]/16 + M[h+1][w+1]/16)
        return r
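To make the three steps concrete, here is a minimal sketch on a grayscale image stored as nested lists. It is not the actual image_edge_enhance_v2.py: the names smooth() and edge_enhance(), the grayscale-only input, and the choice to leave border pixels untouched are all assumptions made for illustration. The massage step follows the massage_pixel() listed further down in this post.

```python
def smooth_pixel(M, w, h):
    # Same weights as the post's smooth_pixel(): centre 1/2, eight neighbours 1/16 each.
    neighbours = sum(M[h + dh][w + dw]
                     for dh in (-1, 0, 1) for dw in (-1, 0, 1)) - M[h][w]
    return M[h][w] / 2 + neighbours / 16


def smooth(M):
    # One smoothing pass over the interior.
    # Assumption: border pixels are simply copied unchanged.
    height, width = len(M), len(M[0])
    out = [row[:] for row in M]
    for h in range(1, height - 1):
        for w in range(1, width - 1):
            out[h][w] = smooth_pixel(M, w, h)
    return out


def massage_pixel(x):
    # As in the post: clip negatives, amplify by 20, clamp to 255, then invert
    # so that unchanged (flat) regions come out white.
    x = int(max(x, 0) * 20)
    return 255 - min(x, 255)


def edge_enhance(image, k=20):
    # Step 1: smooth k times, keeping floats between passes.
    smoothed = [[float(p) for p in row] for row in image]
    for _ in range(k):
        smoothed = smooth(smoothed)
    # Steps 2 and 3: smoothed minus original, then massage each pixel.
    return [[massage_pixel(s - o) for s, o in zip(srow, orow)]
            for srow, orow in zip(smoothed, image)]


# A flat image and a vertical step edge (dark on the left, bright on the right).
flat = [[100] * 5 for _ in range(5)]
step = [[0, 0, 0, 200, 200, 200] for _ in range(5)]
flat_out = edge_enhance(flat, 3)
step_out = edge_enhance(step, 1)
```

In this sketch the flat image comes out entirely white, while the step image gets a black line on the dark side of the edge and stays white elsewhere.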

Here is the code, but it is probably too large to include in this post.

The usage is simply:

Usage: ./image_edge_enhance_v2.py image.{png,jpg} [enhance-factor]

If enhance-factor is not given, it defaults to 20.

Now, some examples:

Lenna:

child:

smooth gradient:

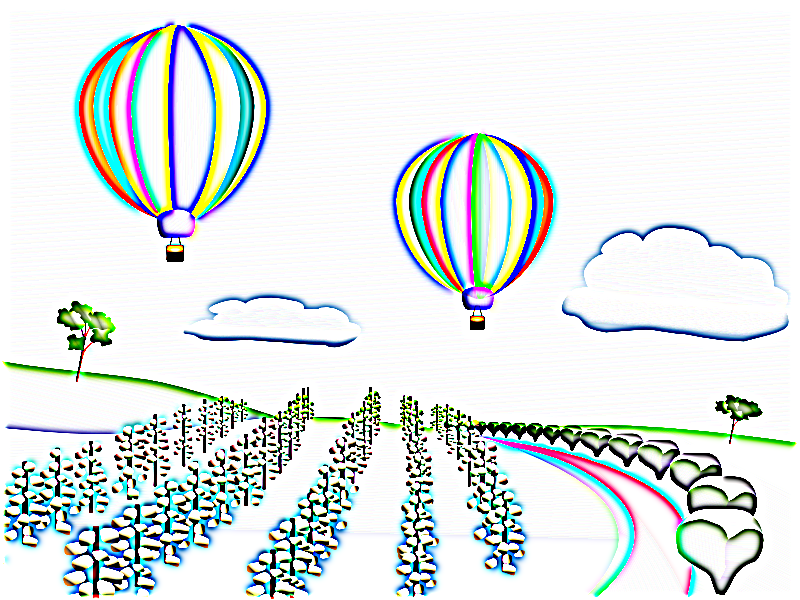

air-balloons:

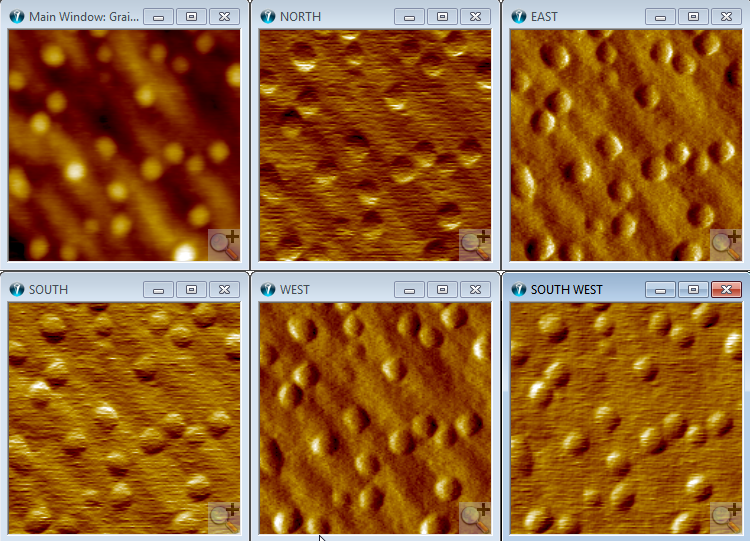

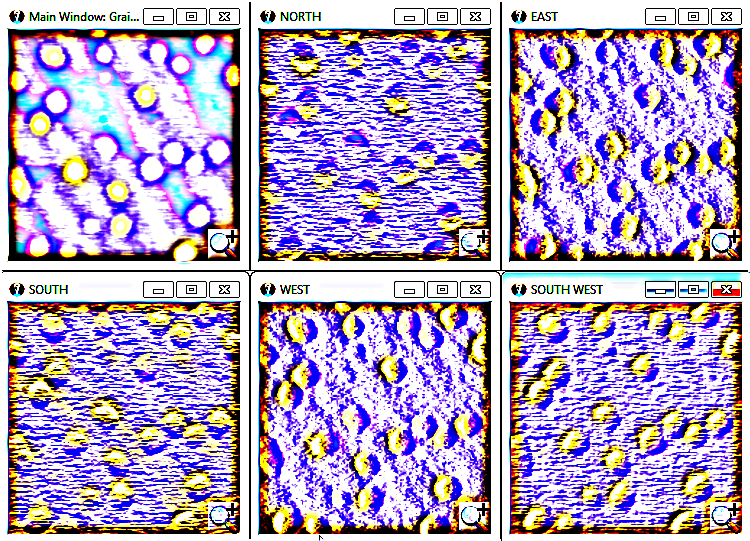

FilterRobertsExample:

So, from those examples we can see it works rather well! And if not quite well enough, you can ramp up the enhance-factor.

Now, some comments:

1) the code neatly maps regions of the same colour, or smoothly varying colour, to white, as our smooth-gradient example shows. This is because the Gaussian smooth of smoothly varying colour is pretty much unchanged from the original image.

2) it seems to work a lot better than first or second order discrete derivatives at finding edges. Indeed, for k iterations of smooth, each result pixel is dependent on all pixels within a radius of k of that pixel. Discrete derivatives are much more local.

3) larger images tend to need a larger enhance-factor. So maybe 40 instead of 20.

4) the code sometimes won't load certain images. I don't yet know why.

5) the balloon example shows the output can sometimes look like a child's drawing. I take this as a big hint that the human brain is doing something similar.

6) "smoothed_image - original_image" is very close to something I called "general-to-specific". I have a small amount of code that implements this idea, but never got around to using it. Take faces, say. In the process of seeing many faces, you average them together. Then the superposition for a specific face is this general/average face minus the specific face. My edge_enhance is very close to this idea, but with a smoothed image in place of an average image (though a smoothed image and an average image are pretty much the same thing). Indeed, babies are known to have very blurry vision for the first few months. So there must be something to this idea of programming neurons with average/blurry images first, and then with sharp images later.

7) the last example, taken from here, hints that maybe this code is actually useful for, say, pre-processing images of cells when looking for cancer cells?

8) potentially we could tweak the algo. I'm thinking the massage_pixel() code in particular.

    def massage_pixel(x):
        if x < 0:
            x = 0
        x *= 20
        x = int(x)
        if x > 255:
            x = 255
        return 255 - x

9) the first version of my code had a subtle bug. After each iteration of smooth the result was cast back to integers, and saved in image format. Turns out this is enough to break the algo, so that a lot of features you would like enhanced actually disappear! It also had a weird convergence effect, where above a certain value, the enhance-factor variable didn't really change the result much. After some work I finally implemented a version where smooth kept the float results until the very end, before mapping back to integers. This was a big improvement, with little features now visible again, and the convergence property of enhance-factor went away. If you keep increasing it, you get markedly different results. Interestingly my original BKO version did not have this bug, as could be seen by different resulting images, and that was the hint I needed that my first Python implementation had a bug.

10) Next on my list for image processing is image-ngrams (a 2D version of letter-ngrams), and then throw them at a tweak of average-categorize.
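The integer-cast bug from comment 9 is easy to reproduce with the 1D smooth. The sketch below is an illustration only, not the original code: smooth_1d() and smooth_n() are hypothetical helpers, the two end samples are left unchanged, and the cast is assumed to be a plain int() truncation (the original may have rounded differently when saving to image format).

```python
def smooth_1d(f, as_int=False):
    # One pass of f[k] -> f[k-1]/4 + f[k]/2 + f[k+1]/4.
    # Assumption: the two end samples are left unchanged.
    out = list(f)
    for k in range(1, len(f) - 1):
        v = f[k - 1] / 4 + f[k] / 2 + f[k + 1] / 4
        # as_int=True mimics casting back to integers after every pass.
        out[k] = int(v) if as_int else v
    return out


def smooth_n(f, n, as_int=False):
    # Apply n smoothing passes in a row.
    for _ in range(n):
        f = smooth_1d(f, as_int)
    return f


# A faint feature: a single sample three levels above a flat background.
signal = [0, 0, 0, 3, 0, 0, 0]

int_2 = smooth_n(signal, 2, as_int=True)
int_10 = smooth_n(signal, 10, as_int=True)
float_2 = smooth_n(signal, 2)
float_10 = smooth_n(signal, 10)
```

With the integer cast, the feature is truncated away within two passes, and every later pass returns the same all-zero signal, which matches the "enhance-factor stops mattering" effect. With floats, the feature keeps spreading, so 2 passes and 10 passes give different results.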

Update: I now have image-ngrams. Code here. Next will be image-to-sp, then tweak average-categorize, then sp-to-image. Then some thinking time of where to go after that.

Also, just want to note that image-edge-enhance is possibly somewhat invariant with changes in lighting levels. Need to test it I suppose, but I suspect it. Recall we claimed invariances of our object to superposition mappings are a good thing!
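A quick 1D sanity check of the lighting idea, as a sketch only. Because the smooth weights sum to 1, adding a constant brightness offset to every sample should leave "smoothed - original" unchanged, while multiplying the brightness scales the difference by the same factor. The helper names here are hypothetical, the end samples are left unchanged, and this says nothing about real photos where pixel values saturate at 0 or 255.

```python
def smooth_1d(f):
    # One pass of f[k] -> f[k-1]/4 + f[k]/2 + f[k+1]/4, end samples unchanged.
    out = list(f)
    for k in range(1, len(f) - 1):
        out[k] = f[k - 1] / 4 + f[k] / 2 + f[k + 1] / 4
    return out


def edge_signal(f, n):
    # "smoothed - original" after n float smoothing passes, before any massaging.
    s = [float(v) for v in f]
    for _ in range(n):
        s = smooth_1d(s)
    return [a - b for a, b in zip(s, f)]


row = [10, 10, 10, 80, 80, 80, 10, 10]
brighter = [v + 50 for v in row]   # uniform brightness offset
scaled = [v * 2 for v in row]      # uniform brightness gain

base = edge_signal(row, 5)
offset_diff = max(abs(a - b) for a, b in zip(base, edge_signal(brighter, 5)))
gain_diff = max(abs(2 * a - b) for a, b in zip(base, edge_signal(scaled, 5)))
```

Here offset_diff and gain_diff both come out as zero up to floating-point error, so in this toy setting the edge signal is invariant to an offset but not to a gain. Since massage_pixel() multiplies by a fixed 20 and then clamps, a gain change would still alter the final image.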

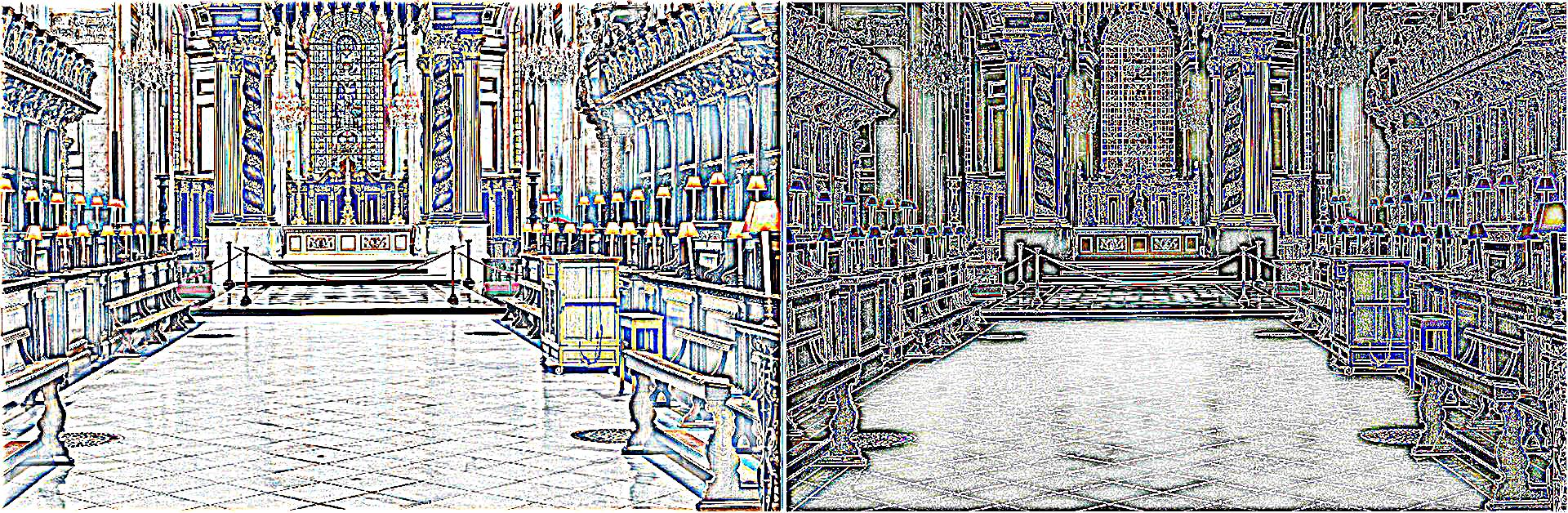

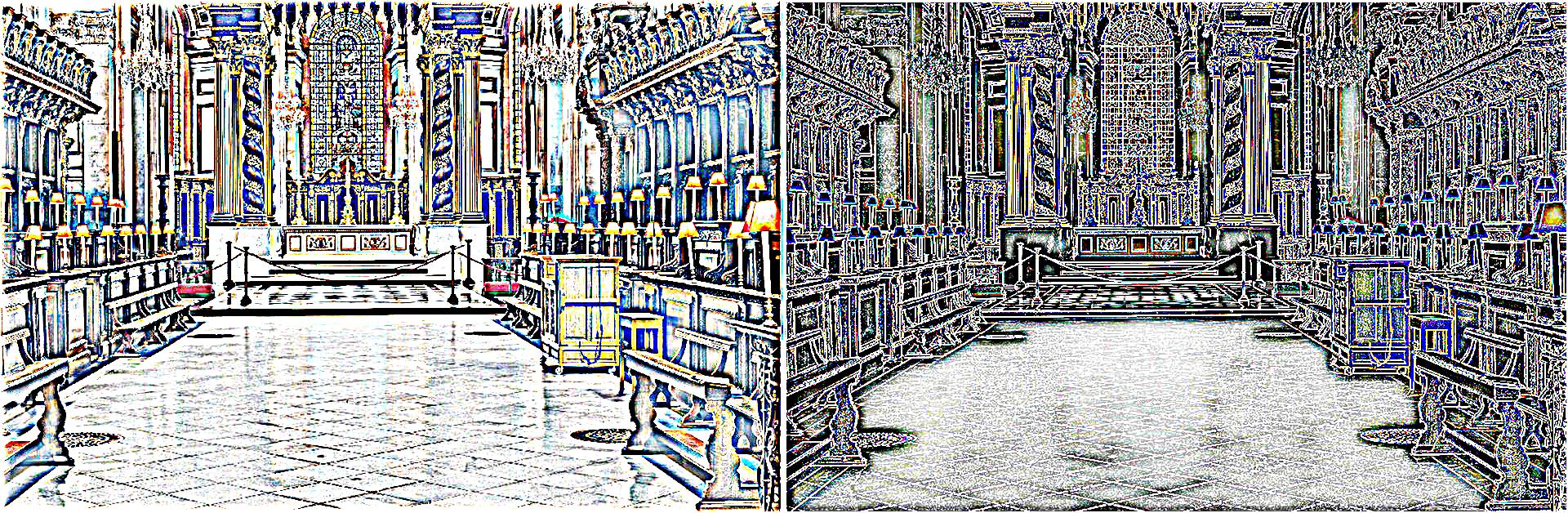

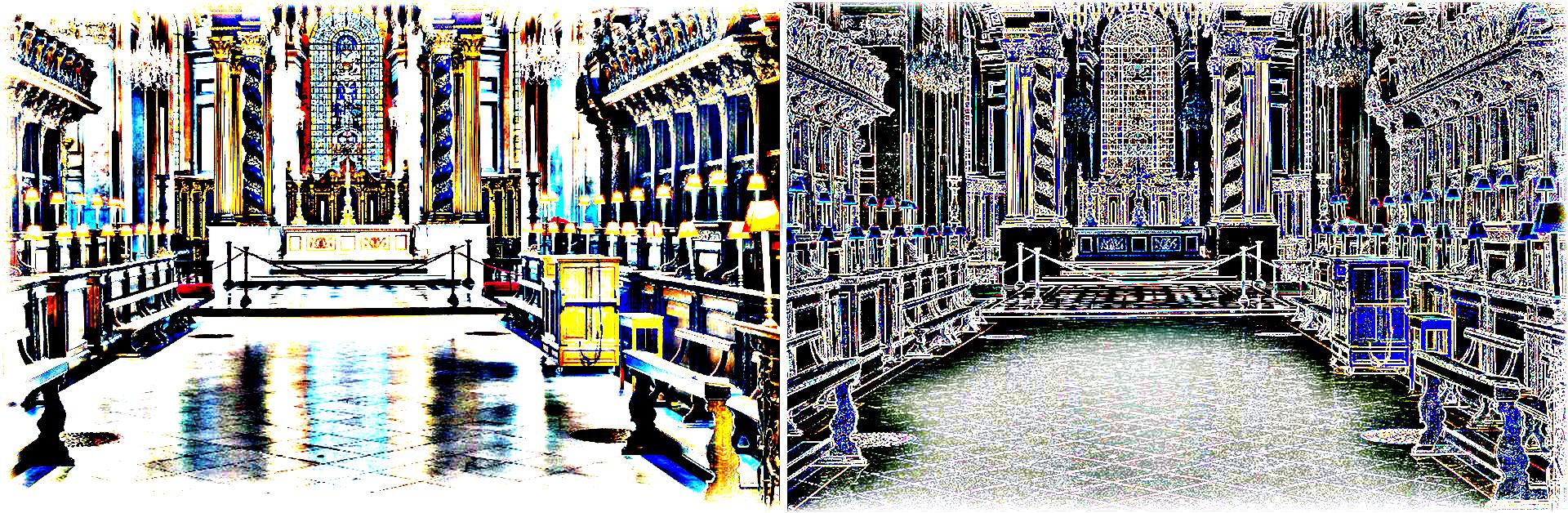

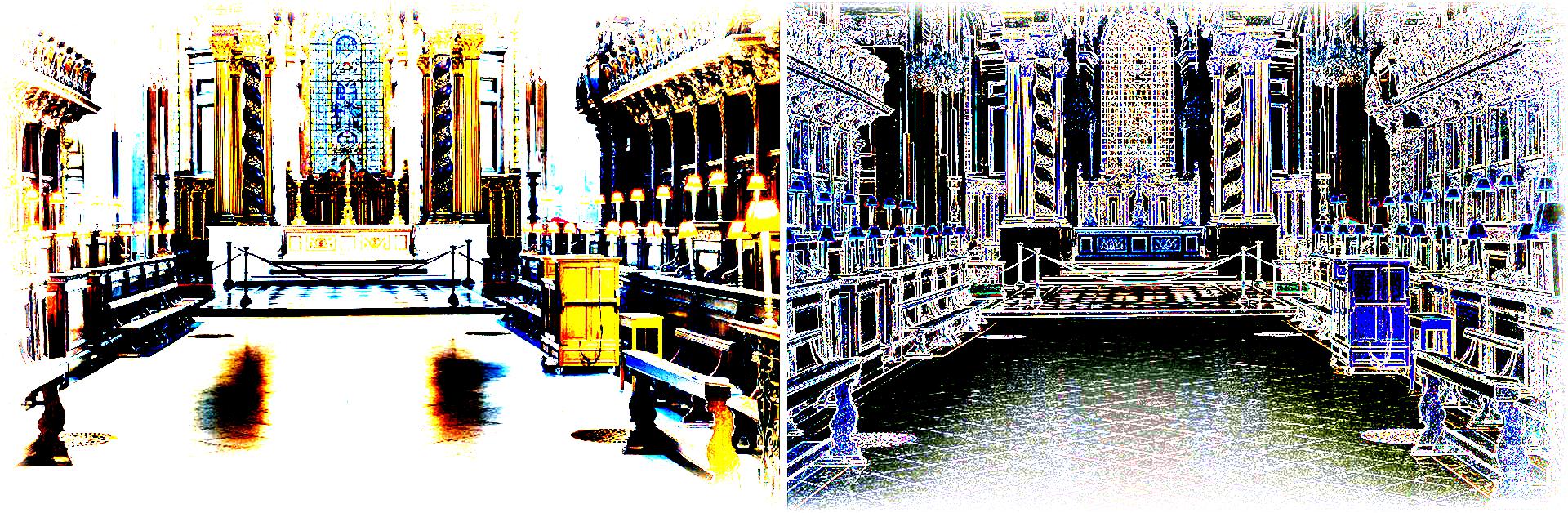

Update: a couple more edge-enhance examples from Wikipedia: edge-enhancement, and edge-detection (first is the original image, the rest are edge enhanced):

For this last set, view the large image to see the details and the differences between different edge enhancement values.
